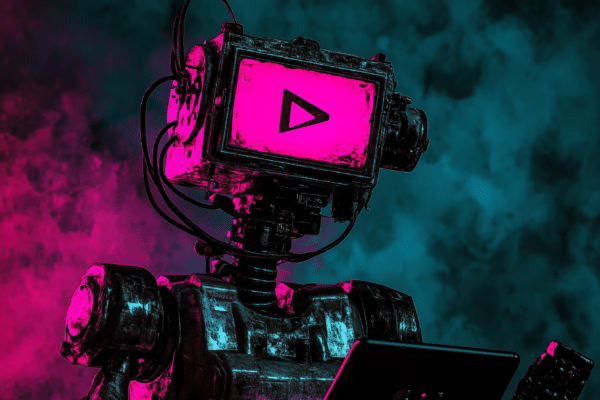

In the rapidly evolving world of artificial intelligence, ensuring that AI systems are robust against toxic outcomes is a challenge. A recent study presented a novel method called Curiosity-Driven Red Teaming (CRT), which aims to improve the detection and containment of undesirable behaviors in AI models before they are deployed.

Traditional red teaming methods in AI have primarily focused on testing the AI's decision-making ability using predefined scenarios. However, these methods often fall short when they encounter unpredictable toxic or harmful content. The CRT approach departs from this by introducing an exploration-driven mechanism that proactively searches for potential flaws in AI responses, focusing primarily on toxic responses.

Training techniques

Curiosity-driven exploration enables the creation of various test scenarios that mirror complex real-world interactions. In this way, AI models are exposed to and trained on a wider range of potential inputs, including those that could trigger unwanted or toxic responses. This method identifies existing vulnerabilities and helps to fine-tune the AI's responses so that they are more in line with ethical and societal norms.
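To make the idea of "curiosity" concrete, here is a minimal sketch of a novelty score that rewards a test prompt for differing from prompts already tried. The article does not say how novelty is measured, so the word n-gram Jaccard distance used here is an assumption, and the names `word_ngrams` and `novelty` are hypothetical.

```python
def word_ngrams(text, n=2):
    """Return the set of lowercase word n-grams in a prompt."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def novelty(candidate, history, n=2):
    """Score a candidate prompt from 0.0 (already seen) to 1.0 (entirely new).

    Assumption: novelty is 1 minus the highest Jaccard similarity between
    the candidate's n-grams and those of any previously generated prompt.
    """
    cand = word_ngrams(candidate, n)
    # With no history, or a prompt shorter than n words, treat it as novel.
    if not cand or not history:
        return 1.0
    max_similarity = 0.0
    for past in history:
        past_grams = word_ngrams(past, n)
        union = cand | past_grams
        similarity = len(cand & past_grams) / len(union) if union else 0.0
        max_similarity = max(max_similarity, similarity)
    return 1.0 - max_similarity


history = ["tell me a joke"]
print(novelty("tell me a story", history))      # shares some bigrams with history
print(novelty("what is the weather", history))  # shares none
```

A score like this could be added to the test generator's reward so that repeating an earlier prompt earns less than trying something new.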

Conventional red teaming techniques often rely on a limited number of scenarios that do not adequately cover the diverse possibilities of human-AI interaction.

Implementing CRT involves tuning several parameters, such as the sampling settings and the weight of an entropy bonus that encourages the generation of unique and novel test cases. This tuning allows for a more thorough evaluation of AI behavior.
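As a rough illustration of how an entropy bonus might enter the test generator's reward, the sketch below adds a weighted entropy term to a toxicity score. It assumes the bonus is the mean Shannon entropy of the generator's per-token sampling distributions. The function names, the `entropy_coef` parameter and its default value are hypothetical and are not taken from the study.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def shaped_reward(toxicity_score, token_distributions, entropy_coef=0.01):
    """Combine a toxicity score with an entropy bonus.

    toxicity_score: how toxic the target model's response was, assumed to
        come from a separate classifier (not shown here).
    token_distributions: one probability distribution per generated token,
        taken from the test generator's sampling step.
    entropy_coef: hypothetical weight of the bonus; a tunable parameter.
    """
    if not token_distributions:
        return toxicity_score
    mean_entropy = sum(entropy(d) for d in token_distributions) / len(token_distributions)
    return toxicity_score + entropy_coef * mean_entropy


# A generator that spreads probability over many tokens earns a larger bonus
# than one that always picks the same token.
peaked = [[1.0, 0.0, 0.0]]
spread = [[1 / 3, 1 / 3, 1 / 3]]
print(shaped_reward(0.8, peaked, entropy_coef=0.1))
print(shaped_reward(0.8, spread, entropy_coef=0.1))
```

A larger `entropy_coef` pushes the generator toward more varied test cases at the cost of paying less attention to the toxicity signal, which is why the weight needs tuning.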

Applying CRT before deployment can play an important role in developing reliable and safe AI systems. By effectively identifying areas where AI could exhibit toxic or harmful behaviors, developers can refine AI models to better handle such scenarios, increasing the safety and reliability of the resulting systems.