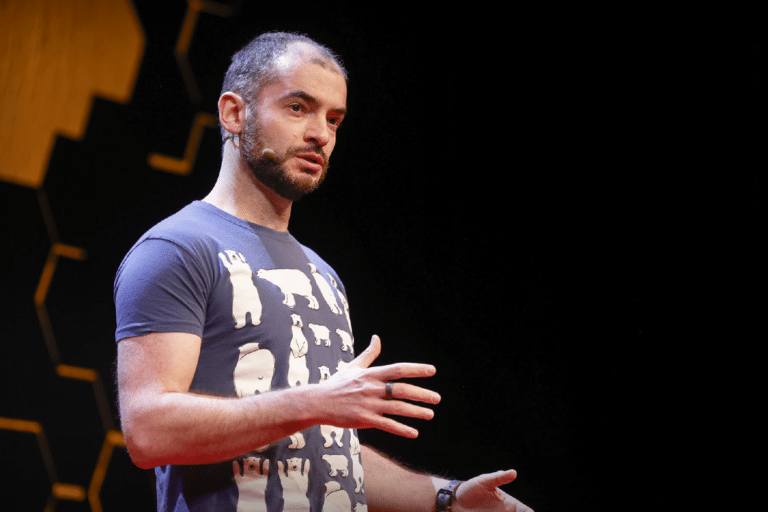

Ilya Sutskever, co-founder and former chief scientist of OpenAI, has raised $1 billion for his artificial intelligence company, Safe Superintelligence Inc. (SSI). The round values the start-up at an estimated $5 billion, just over three months after its founding. The fundraising was led by investors including NFDG, a16z, Sequoia, DST Global and SV Angel.

SSI appears to be a direct competitor to Sutskever's former company OpenAI, albeit with a slightly different objective. According to its website, SSI's goal is to safely develop an AI model that is more intelligent than humans. It could therefore also compete with Anthropic, another company founded by former OpenAI employees that aims to build safe AI models.

So far, SSI stands apart in stating that it will develop only one product: a safe superintelligence.

OpenAI and Anthropic both offer products, ChatGPT and Claude respectively, but neither fits the definition of "superintelligence" set out by philosopher Nick Bostrom in his 2014 book *Superintelligence: Paths, Dangers, Strategies*.

In the book, Bostrom describes an AI system whose cognitive abilities far surpass those of the most intelligent human. At the time, he predicted that such an entity could emerge as early as "the first third of the next century".

There is no agreed scientific definition or standard measure of "superintelligence", but given the context, it is reasonable to assume that SSI will focus on research and development until it can release an AI model that is demonstrably more capable than the average human.