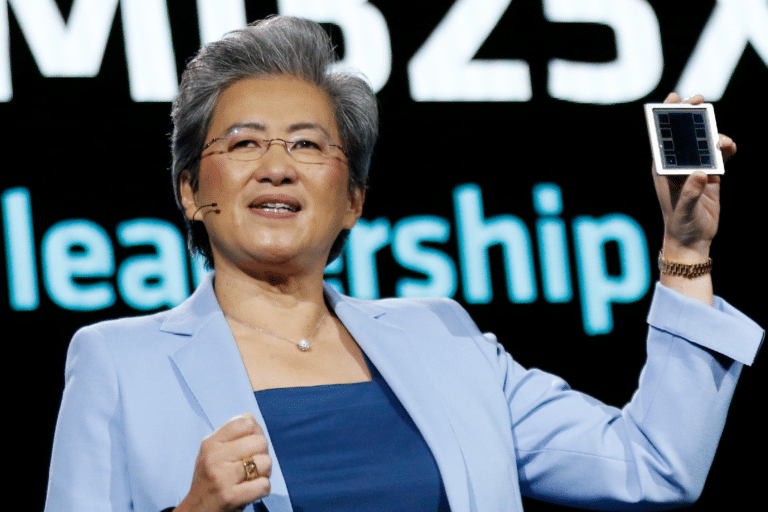

AMD has unveiled its latest chip, the Instinct MI325X, an artificial intelligence accelerator designed to compete directly with NVIDIA in the AI data center market. Based on the CDNA 3 architecture, the new accelerator is positioned as a high-performance alternative to its rival's flagship products. According to AMD, the MI325X outperforms NVIDIA's H200 by up to 30% in AI-intensive workloads.

The Instinct MI325X is designed to deliver high performance and efficiency in artificial intelligence workloads, including model training, fine-tuning, and inference. The GPU has 304 compute units (CUs) and up to 256 GB of HBM3E memory with 6.0 TB/s of bandwidth, making it a formidable tool for accelerating AI models in large-scale data centers.

In terms of theoretical peak performance, the MI325X reaches 1,307.4 TFLOPS in FP16 and BF16 and 2,614.9 TFLOPS in FP8, while in integer (INT8) operations it reaches 1,978.9 TOPS. In addition, AMD's inference benchmarks on models such as Mistral 7B, Llama 3.1 70B, and Mixtral 8x7B indicate that the MI325X significantly outperforms NVIDIA's H200, reinforcing the company's claims about its chip's advantage in inference tasks.
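The 256 GB capacity matters because, as a rough rule, a model's weights occupy its parameter count multiplied by the bytes per parameter of the number format (2 bytes for FP16/BF16, 1 byte for FP8). The Python sketch below applies that rule to two of the models named above. It is a weights-only estimate under stated assumptions: parameter counts are taken nominally from the model names (7 billion and 70 billion), capacity is treated as decimal gigabytes, and KV cache, activations, and runtime overhead are ignored, so real deployments need more memory than these figures suggest. The helper names are hypothetical.

```python
# Weights-only memory sketch (assumption: ignores KV cache, activations, overhead).
BYTES_PER_PARAM = {"FP16": 2, "BF16": 2, "FP8": 1}
HBM_CAPACITY_GB = 256  # MI325X capacity from the article, treated as decimal GB


def weights_gb(params_billions: float, dtype: str) -> float:
    """Approximate weight size in decimal GB: (params * 1e9) * bytes / 1e9."""
    return params_billions * BYTES_PER_PARAM[dtype]


def fits_on_one_accelerator(params_billions: float, dtype: str,
                            capacity_gb: float = HBM_CAPACITY_GB) -> bool:
    """True if the weights alone fit in a single accelerator's memory."""
    return weights_gb(params_billions, dtype) <= capacity_gb


def max_params_billions(dtype: str, capacity_gb: float = HBM_CAPACITY_GB) -> float:
    """Largest parameter count (in billions) whose weights fit in capacity_gb."""
    return capacity_gb / BYTES_PER_PARAM[dtype]


# Nominal parameter counts inferred from the model names (an approximation).
for name, params in [("Mistral 7B", 7), ("Llama 3.1 70B", 70)]:
    for dtype in ("FP16", "FP8"):
        size = weights_gb(params, dtype)
        fits = fits_on_one_accelerator(params, dtype)
        print(f"{name} in {dtype}: ~{size:.0f} GB of weights, fits: {fits}")

print(f"FP16 ceiling: ~{max_params_billions('FP16'):.0f}B parameters")
```

Under these assumptions, Llama 3.1 70B needs about 140 GB of weights in FP16, which still fits on a single 256 GB accelerator, and the FP16 ceiling works out to roughly 128 billion parameters before any runtime memory is counted.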

Availability

During the new chip's presentation, AMD also confirmed that production of the MI325X will begin before the end of 2024. The company has also secured partnerships with leading manufacturers such as Dell, Gigabyte, Hewlett Packard Enterprise, Lenovo, Supermicro, and Eviden to integrate the accelerator into their data center systems. The MI325X is expected to be commercially available in the first quarter of 2025.

The launch of the Instinct MI325X was accompanied by a clear roadmap for AMD's upcoming releases. The company plans to introduce the MI350 series, based on the CDNA 4 architecture, in the second half of 2025, followed in 2026 by the MI400 series, which will use a next-generation architecture. AMD said that its line of AI accelerators has become the fastest-growing product category in the company's history.

The MI350 is shaping up to be a significant step up from the MI325X. AMD says it will offer up to a 35x improvement in inference performance and up to 288 GB of HBM3E memory per accelerator. These enhancements should strengthen AMD's position in the competitive AI market.

Outlook

AMD is also committed to strengthening its accelerators' support for the main AI libraries and models, such as Meta's Llama 3.2 and Stability AI's Stable Diffusion 3. In addition, Instinct accelerators already support over a million models on the Hugging Face platform, underlining AMD's effort to provide developers with a robust and efficient environment.

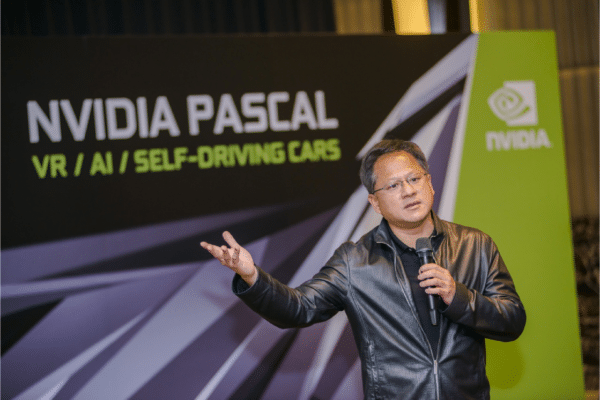

AMD's strategy is not only to compete with NVIDIA on performance but also to offer a total cost of ownership advantage through a favorable cost-performance ratio. In practice, this could mean higher performance at the same price as the competition, or equivalent capacity at a lower cost. AMD has not yet disclosed the price of the MI325X, but it is expected to come in below the reported $28,900 (approximately 26,428 euros) price of NVIDIA's H200, which would underline the company's focus on price competitiveness.
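The cost-performance argument can be made concrete with simple performance-per-dollar arithmetic. The Python sketch below is a hypothetical illustration only: it treats AMD's "up to 30%" claim as a best-case relative speedup, uses the $28,900 H200 price cited above, and plugs in an invented MI325X price of $25,000 purely as a placeholder, since AMD has not announced one. The function names are illustrative and not part of any AMD or NVIDIA tool.

```python
# Hypothetical performance-per-dollar sketch. Performance is relative (H200 = 1.0).
H200_PRICE_USD = 28_900                  # reported H200 price cited in the article
CLAIMED_SPEEDUP = 1.30                   # AMD's "up to 30%" claim, treated as a best case
HYPOTHETICAL_MI325X_PRICE_USD = 25_000   # placeholder: AMD has not disclosed a price


def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance delivered per US dollar."""
    return relative_perf / price_usd


def break_even_price(speedup: float, reference_price_usd: float) -> float:
    """Price at which a faster chip matches the reference chip's perf per dollar."""
    return speedup * reference_price_usd


def value_advantage(relative_perf: float, price_usd: float,
                    reference_price_usd: float) -> float:
    """Ratio of a chip's perf per dollar to the reference's (>1 means better value)."""
    return (perf_per_dollar(relative_perf, price_usd)
            / perf_per_dollar(1.0, reference_price_usd))


# Scenario 1: same price as the H200, so the advantage equals the speedup.
same_price = value_advantage(CLAIMED_SPEEDUP, H200_PRICE_USD, H200_PRICE_USD)
# Scenario 2: equal performance at a lower (hypothetical) price.
lower_price = value_advantage(1.0, HYPOTHETICAL_MI325X_PRICE_USD, H200_PRICE_USD)

print(f"Break-even price: ${break_even_price(CLAIMED_SPEEDUP, H200_PRICE_USD):,.0f}")
print(f"Same price, 30% faster: {same_price:.2f}x perf per dollar")
print(f"Same performance, lower price: {lower_price:.2f}x perf per dollar")
```

Under these assumptions, a best-case 30% speedup would keep the MI325X ahead on performance per dollar at any price below about $37,570; the real comparison depends on workload-specific performance and actual pricing.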